Molly Russell, 14, died in 2017, and a two-week inquest into her death concluded on Friday. The senior coroner found that the social media content Molly viewed “likely” contributed to her death. In the weeks leading up to her death, Molly viewed graphic material about self-harm and suicide on Pinterest and Instagram.

Reporters crowded a courthouse in north London, England, on Friday as they awaited the end of the two-week inquest into the 2017 death of Molly Russell.

Molly, 14, “died from an act of self-harm while suffering from depression and the negative effects of online content,” senior coroner Andrew Walker told North London Coroner's Court.

The senior coroner said it was “likely” that social media content viewed by Molly, who was already suffering from a depressive illness, affected her mental health in a way that “contributed to her death in a more than minimal way.”

Walker said it would not “be safe” to leave suicide as a conclusion for him to consider.

Molly should not have been able to view material that was inappropriate for a 14-year-old.

According to the inquest, Molly died in November 2017 after viewing 2,100 Instagram posts about self-harm, suicide, and depression in the preceding six months. Her Pinterest board held a further 469 images on related subjects.

Walker found that Molly had accounts on a number of online platforms, including Instagram and Pinterest, which displayed material that was “not safe” for a child to view.

Walker said the algorithms of these websites and apps led to “binge periods” in which Molly viewed upsetting images, videos, and text, some of which she had not specifically asked to see.

Walker said he had seen online content that “no 14-year-old should be able to access” because it was “especially graphic.”

Child psychiatrist Dr. Navin Venugopal said on Tuesday that what Molly had seen was “extremely frightening, distressing,” and that it had left him unable to sleep for “a few weeks.”

Walker said in his conclusion that some of the material “romanticised” self-harm and discouraged seeking support from people who might be able to help.

He went on to say that some of the material portrayed self-harm and suicide as an “inevitable result of an illness that could not be healed from.”

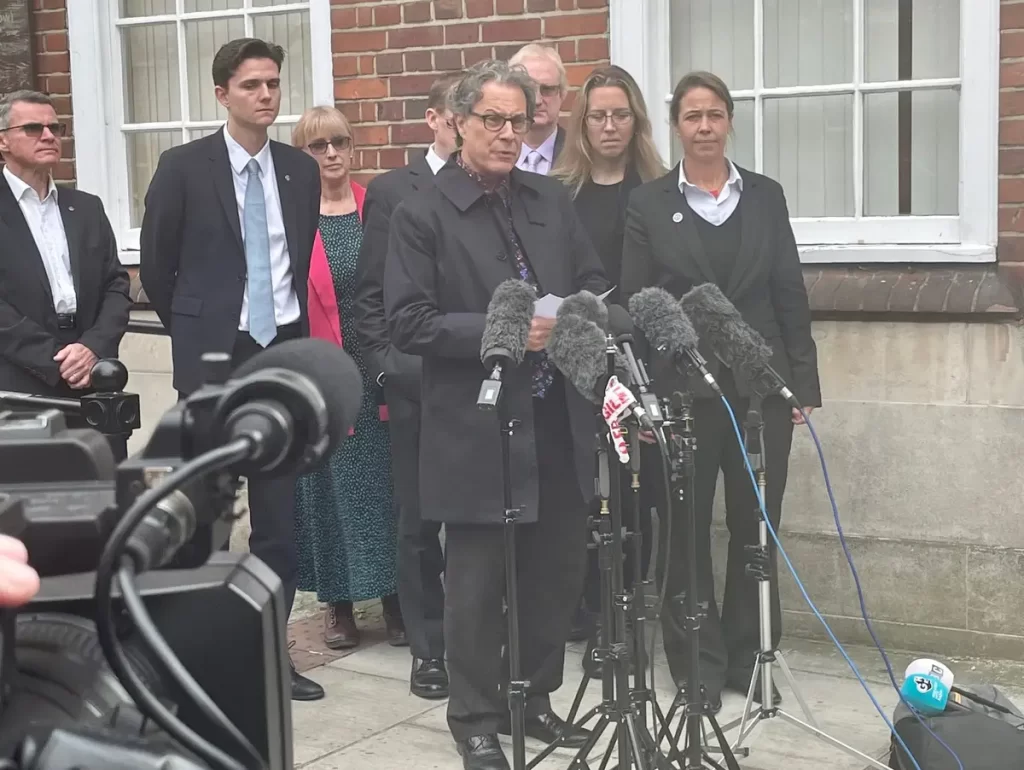

Ian Russell, Molly's father, spoke briefly to the media outside the Coroner's Court after the inquest concluded.

“We've heard a lot in the past week about one heartbreaking narrative, Molly's story,” he said. “Sadly, there are currently far too many people who are similarly impacted.”

“At this point, I simply want to stress that even though things seem hopeless, there is always hope. If you're hurting, please talk to someone you trust or seek support from one of the many amazing organisations rather than engaging with potentially harmful online stuff,” he said.

Later that day, at a news conference, Russell questioned remarks made to the inquest on Monday by a senior Meta official. Elizabeth Lagone, director of health and wellbeing policy at Meta, the company that owns Instagram, had said that most of the posts Molly viewed were “safe” for children to see.

Russell said a senior Meta official had described the stream of content Molly was exposed to as safe and compliant with the platform's rules. “My daughter Molly would probably still be alive if this crazy trail of life-sucking stuff was safe,” he said.

A senior Pinterest official told the inquest last week that the site was “not safe” when Molly used it. The company's head of community operations, Jud Hoffman, expressed regret that Molly had been able to access graphic material on the platform and apologised.

The senior coroner will send letters to Pinterest, Meta, the UK's Department for Digital, Culture, Media and Sport, and the communications regulator, Ofcom.

“The decision should stun Silicon Valley,” said one commentator.

According to campaigners, the landmark conclusion may force social media companies to take responsibility for protecting children on their platforms.

The verdict “should send shockwaves across Silicon Valley,” according to Sir Peter Wanless, CEO of the British child safety organisation NSPCC. According to Sky News, he continued by saying that digital firms “must expect to be held to account when they put the safety of children second to commercial decisions.”

“Online safety for our children and young people needs to be a prerequisite, not an afterthought,” Prince William tweeted on Friday.

Meta and Pinterest set out how they would respond to the coroner's findings in statements released after the hearing.

“We're committed to making sure that Instagram is a great experience for everyone, especially teenagers, and we will carefully review the coroner's full report when he submits it,” a Meta spokeswoman said.

“We'll keep working with the top independent experts in the world to make sure the adjustments we make provide the best possible protection and assistance for kids,” the statement said.

According to a statement from a Pinterest representative, the company “listened very carefully” to what the coroner and Molly's family had to say during the inquest.

According to the statement, Pinterest is committed to making continuous improvements to ensure that the platform is safe for all users, and it will consider the coroner's report carefully.

“Over the past few years, we have strengthened our policies regarding self-harm content, offered pathways to compassionate support for individuals who require it, and extensively invested in developing new technologies that automatically identify and take action on self-harm content,” the company said.